As we finish our first week back at work in 2015, we thought it might be nice to reflect on what we accomplished in 2014 and what our resolutions are for this year.

Looking Back

Carrie

As I type this I am sitting in a living room piled high with boxes and strewn with bubble wrap and packing tape. I finished my six and a half year run at Columbia on Friday and will be starting a new position at Emory University’s Manuscript, Archives, & Rare Book Library at the beginning of next month.

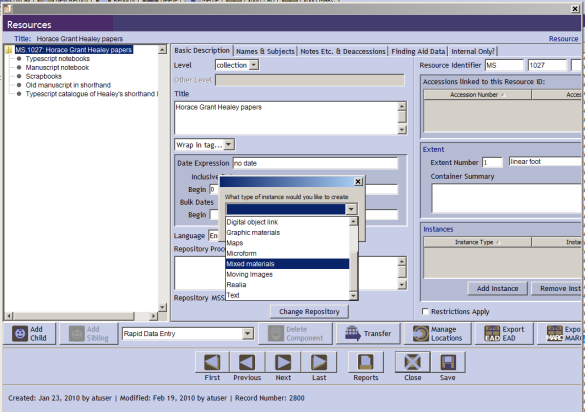

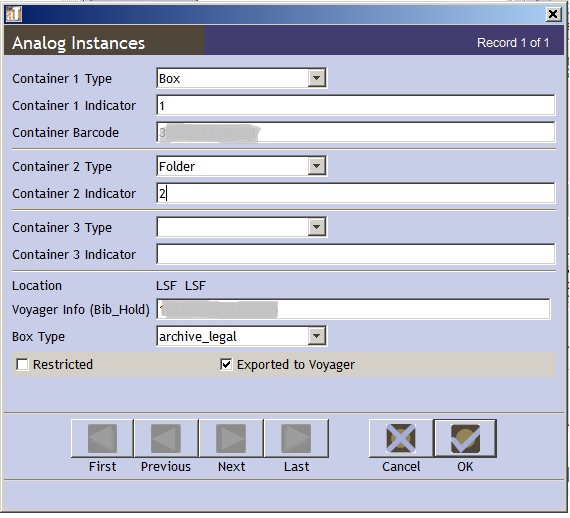

This past year was full of professional changes. I got a new director and moved offices, our library annexed another unit that landed under my supervision, and our University Librarian retired at the end of the year. Amid the chaos and change-related anxiety, though, my team and I managed to hit some pretty major milestones. We completed a comprehensive collection survey that resulted in DACS-compliant collection-level records for all of our holdings, published our 1000th EAD finding aid, and kept up with the 3000-plus feet of accessions that came through our doors.

Cassie

Last year I spent a lot of time learning how to work with data more effectively (in part thanks to this blog!). I used OpenRefine and regular expressions to clean up accessions data. Did lots of ArchivesSpace planning, mapping, and draft policy work. Supervised an awesome field study. Participated in our Aeon implementation. Began rolling out changes to how we create metadata for archival collections and workflows for re-purposing the data. I also focused more than I ever have before on advocating for myself and the functions I oversee. This included a host of activities, including charting strategic directions, but mainly comprised lots of small conversations with colleagues and administrators about the importance of our work and the necessity to make programmatic changes. I also did a ton of UMD committee work. Oh, and got married! That was pretty happy and exciting.

Maureen

2014 was my sixth year working as a professional archivist, and continued my streak (which has finally ended, I swear) of being a serial short-timer. Through June of last year, I worked with a devoted team of archives warriors at the Tamiment Library and Robert F. Wagner Labor Archives. There, we were committed to digging ourselves out of the hole of un-described resources, poor collection control, and an inconsistent research experience. Hence, my need for this blog and coterie of smart problem solvers. I also gave a talk at the Radcliffe Workshop on Technology and Archival Processing in April, which was an archives nerd’s dream — a chance to daydream, argue, and pontificate with archivists way smarter than I am.

In June I came to Yale — a vibrant, smart, driven environment where I work with people who have seen and done it all. And I got to do a lot of fun work where I learned more about technology, data, and archival description to solve problems. And I wrote a loooot of blog posts about how to get data in and out of systems.

Meghan

It kind of feels like I did nothing this past year, other than have a baby and then learn how to live like a person who has a baby. 2014 was exhausting and wonderful. I still feel like I have a lot of tricks to learn about parenting; for example, how to get things done when there is a tiny person crawling around my floor looking for things to eat.

Revisiting my Outlook calendar reminds me that even with maternity leave, I had some exciting professional opportunities. I proposed, chaired, and spoke at a panel on acquisition, arrangement, and access for sexually explicit materials at the RBMS Conference in Las Vegas, and also presented a poster on HistoryPin at the SAA Conference in Washington, D.C. Duke’s Technical Services department continues to grow, so I served on a number of search committees, and chaired two of them. I continue to collaborate with colleagues to develop policies and guidelines for a wide range of issues, including archival housing, restrictions, description, and ingest. And we are *this close* to implementing ArchivesSpace, which is exciting.

Looking Forward

Carrie

I have so much to look forward to this year! I’m looking forward to learning a new city, to my first foray into the somewhat dubious joys of homeownership, and to being within easy walking distance of Jeni’s ice cream shop. And that’s all before I even think about my professional life. My new position oversees not only archival processing, but also cataloging and description of MARBL’s print collections, so I will be spending a lot of time learning about rare book cataloging and thinking hard about how to streamline resource description across all formats.

Changing jobs is energizing and disruptive in the best possible way, so my goal for the year is to settle in well and to learn as much as possible: from my new colleagues, from my old friends, and from experts and interested parties across the profession.

Cassie

I am super excited to be starting at the Orbis Cascade Alliance as a Program Manager in February. I’ll be heading up the new Collaborative Workforce Program covering the areas of shared human resources, workflow, policy, documentation, and training. The Alliance just completed migrating all 37 member institutions to a shared ILS. This is big stuff and a fantastic foundation to analyze areas for collaborative work.

While I can’t speak to specific goals yet, I know I will be spending a lot of time listening and learning. Implementing and refining a model for shared collaborative work is a big challenge, but has huge potential on so many fronts. I’m looking forward to learning from so many experts in areas of librarianship outside of my experiences/background. I’m also thrilled to be heading back to the PNW and hoping to bring a little balance back to life with time in the mountains and at the beach.

Maureen

I have a short list of professional resolutions this year. Projects, tasks, and a constant stream of email have a way of overshadowing what’s really important. I’ll count on my fellow bloggers to remind me of these priorities!

- All ArchivesSpace, all the time. Check out the ArchivesSpace @ Yale blog for more information about this process.

- I want to create opportunities for myself for meaningful direct interaction with researchers so that their points of view can help inform the decisions we make in the repository. This may mean that I take more time at the reference desk, do more teaching in classes, or find ways to reach out and understand how I can be of better service.

- I want to develop an understanding of what the potential is for archival data in a linked data environment. I want to develop a vision of how we can best deploy this potential for our researchers.

- I have colleagues here at Yale who are true experts at collection development — I want to learn more about practices, tips, tricks, pitfalls, and lessons learned.

Meghan

I have a few concrete professional goals for the coming year:

- I want to embrace ArchivesSpace and learn to use it like an expert.

- I will finish my SPLC guide — the print cataloging is finished, so as soon as I get a chance I will get back to this project.

- I have requested a regular desk shift so that I can stay more connected to the researchers using the collections we work so hard to describe.

- I am working more closely with our curators and collectors on acquisitions and accessioning, including more travel.

- My library is finishing a years-long renovation process, so this summer I will be involved with move-related projects (and celebrations). Hopefully there will be lots of cake for me in 2015.